The aim

To automate easy decisions so SMEs can focus on the tough calls.

The ask

DVS were asked to build a data-assisted decision-support application that calculates KPIs with RAG (red, amber, green) scores and presents them to decision-makers, helping them choose which supplier to use. Rather than simply visualising these on a new dashboard, our outputs would be integrated with existing tools so that the insight was in front of users at the point decisions are made.

What does good look like?

As we started the process, we quickly discovered that KPIs were not consistently defined across the business. This was a concern as this product would have a significant impact on decisions at the core of the business.

To overcome this, we set up meetings to understand departmental needs. Then, we ran these through workshops with managers and executives to align and define a set of KPIs. We scrutinised these definitions so the business could agree on how edge cases would work, asking probing questions such as:

- How should we present the data where you have newer suppliers with less history?

- How should we weight the score for different metrics?

- What is the balance between quality and cost? How much more are you willing to pay for better ‘quality’ suppliers?

Alongside this, we recognised that how the data and the returned RAG scores were interpreted would be crucial to the success of the project, and that different metrics would need to carry different weights.
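To make the weighting and edge-case questions concrete, here is a minimal sketch of how a weighted score could be turned into a RAG status. It is an illustration, not the client's agreed definitions: the metric names, weights, thresholds and the minimum-history rule are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict

# Hypothetical values for illustration only; the real weights and
# thresholds were agreed with the business and are not published here.
WEIGHTS = {"quality": 0.5, "cost": 0.3, "delivery": 0.2}
AMBER_THRESHOLD = 0.5
GREEN_THRESHOLD = 0.75
MIN_OBSERVATIONS = 10  # assumed cut-off for "not enough history"


@dataclass
class SupplierKpis:
    name: str
    observations: int         # number of historical records behind the KPIs
    scores: Dict[str, float]  # each KPI normalised to the range 0..1


def weighted_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of the KPI scores, normalised by the total weight."""
    total_weight = sum(weights.values())
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


def rag_status(supplier: SupplierKpis) -> str:
    """Map a supplier to RED, AMBER or GREEN, flagging thin history separately."""
    if supplier.observations < MIN_OBSERVATIONS:
        return "INSUFFICIENT_DATA"
    score = weighted_score(supplier.scores, WEIGHTS)
    if score >= GREEN_THRESHOLD:
        return "GREEN"
    if score >= AMBER_THRESHOLD:
        return "AMBER"
    return "RED"
```

Flagging newer suppliers separately, rather than forcing them into a colour, is one possible answer to the "less history" question; another would be to show the score with a visible caveat.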

Technical pragmatism

At DVS, one of our key priorities is always to find the right balance between cost and performance. Our app has the proven ability to respond to requests in milliseconds, but we had to ask the question: “Is it good value to have additional compute on standby, to respond to concurrent requests instantly?”

In the end, we brought the technical and business sides of the organisation together to agree on a balance of cost and performance. We chose to handle requests consecutively rather than concurrently, which could reduce compute cost by as much as 90%.

The build

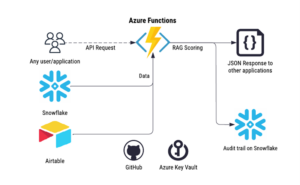

We wanted to build an application which could be owned, maintained and updated by the client, so naturally we developed and deployed into their existing Azure environment. Three Azure Functions were created, which could be run and monitored independently. The core Function was our ‘RAG Scoring’ application, which could be triggered by an HTTP request, and returned a JSON structure containing the KPIs and RAG scores.
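As a rough sketch of the request and response shape described above, the handler below takes request parameters and returns a status code with a JSON body. It deliberately leaves out the Azure Functions bindings, and the parameter name `supplier_id`, the KPI names, the values and the response fields are all hypothetical.

```python
import json
from typing import Dict, Tuple

# Hypothetical example data; the real KPIs came from the client's own systems.
_EXAMPLE_KPIS = {
    "supplier-001": {
        "on_time_rate": {"value": 0.96, "rag": "GREEN"},
        "cost_index": {"value": 1.12, "rag": "AMBER"},
    },
}


def handle_request(params: Dict[str, str]) -> Tuple[int, str]:
    """Return (HTTP status code, JSON body) for a RAG scoring request."""
    supplier_id = params.get("supplier_id")
    if not supplier_id:
        return 400, json.dumps({"error": "supplier_id is required"})

    kpis = _EXAMPLE_KPIS.get(supplier_id)
    if kpis is None:
        return 404, json.dumps({"error": "unknown supplier"})

    # One entry per KPI, each carrying its value and RAG status.
    return 200, json.dumps({"supplier_id": supplier_id, "kpis": kpis})
```

Keeping the scoring logic in a plain function like this, separate from the HTTP trigger, is one way to make it easy to test and to reuse from other Functions.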

Security & best practice

The applications could be secured behind Azure AD, which required every user to be registered with the client on Azure and to use multi-factor authentication.

Beyond that, we deployed our standard good practice in technical development, including storing secrets in Azure Key Vault and continuous integration through GitHub Actions.

Given the impact that our application could have on business decisions, it was critical that a record of KPIs and RAG scores was maintained. This was accomplished by triggering the API from a scheduled Function, which would store the output in a data warehouse.
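The audit trail can be pictured as an append-only table of timestamped snapshots. The sketch below uses an in-memory SQLite database purely as a stand-in for the data warehouse, and the table name, columns and function names are assumptions rather than the deployed schema.

```python
import json
import sqlite3
from datetime import datetime, timezone
from typing import Optional


def init_store(conn: sqlite3.Connection) -> None:
    """Create the (hypothetical) snapshot table if it does not exist yet."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS rag_snapshots (
               captured_at TEXT NOT NULL,
               supplier_id TEXT NOT NULL,
               payload     TEXT NOT NULL
           )"""
    )


def store_snapshot(
    conn: sqlite3.Connection,
    supplier_id: str,
    payload: dict,
    captured_at: Optional[datetime] = None,
) -> None:
    """Append one API response to the history; existing rows are never updated."""
    captured_at = captured_at or datetime.now(timezone.utc)
    conn.execute(
        "INSERT INTO rag_snapshots (captured_at, supplier_id, payload) VALUES (?, ?, ?)",
        (captured_at.isoformat(), supplier_id, json.dumps(payload)),
    )
    conn.commit()
```

A scheduled job would call `store_snapshot` once per supplier on each run, so that any past decision can be checked against the scores that were shown at the time.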

The outcome

The new process has delivered better, faster decisions for the client. The team's capacity has increased, giving the business room to grow. The quality of the selections has increased too. Next is to track the impact on their client NPS!

The future

This is the first step on a machine learning roadmap. We’ve built a behind-the-scenes ‘recommendation tool’ and are assessing where the model and the human process agree and disagree. The plan is to build trust in machine learning and automate as many easy decisions as possible.
